Introduction

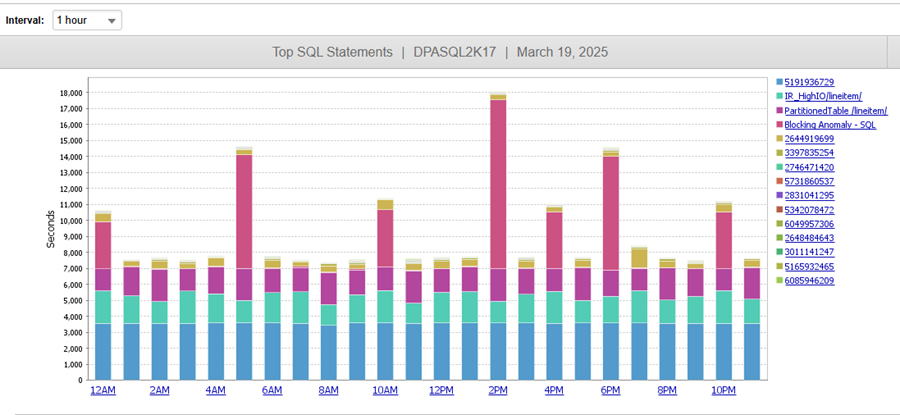

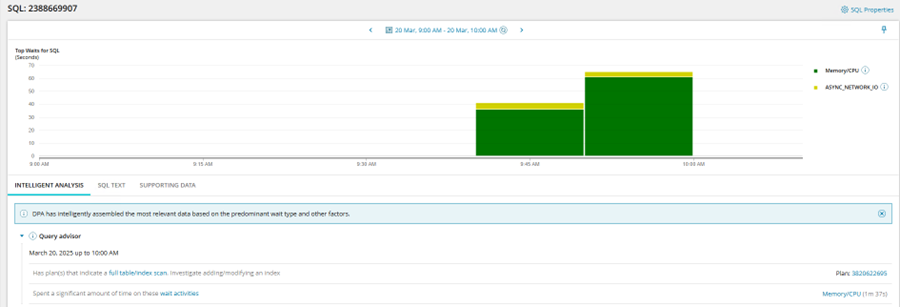

Typical performance bottleneck as observed in DPA

As database professionals, we’re always chasing performance. But good performance doesn’t happen automatically. Database-centric applications require a well-planned schema, effective indexing, and smart SQL queries. Since SQL is a declarative language, it leaves the optimizer in charge, which doesn’t always result in the most efficient execution.

Anyone who wants to get really good at SQL performance tuning learns to dig deeper. We read execution plans, analyze wait statistics, and fine-tune indexing or query structure to help the optimizer make better choices. It’s a process of translating technical telemetry into practical improvements in pursuit of a faster, healthier database.

But what if we could accelerate this process? What if we could pair SolarWinds® Database Performance Analyzer (DPA)—a trusted tool for deep database visibility—with an AI assistant that explains complex performance data in plain, human-readable language? That’s exactly what this article explores: combining DPA metrics with OpenAI to generate intelligent, contextual SQL performance recommendations that bridge the gap between raw data and actionable insight.

Why This Integration?

Why This Integration?

SolarWinds Database Performance Analyzer (DPA) already offers robust tools to help DBAs uncover the root causes of query delays. With its wait-time analytics, detailed execution plans, and precise I/O and wait metrics, DPA equips teams with everything needed to diagnose performance problems confidently.

However, interpreting those insights still requires manual expertise. Wouldn’t it be great if you had an assistant who could:

Automatically analyzing poor-performing SQL

Automatically analyzing poor-performing SQL

Summarizing execution plans in natural language

Summarizing execution plans in natural language

Recommending query rewrites where appropriate

Recommending query rewrites where appropriate

Translating performance data into actionable, human-readable suggestions

Translating performance data into actionable, human-readable suggestions

Manual analysis of execution plans can be time-consuming.

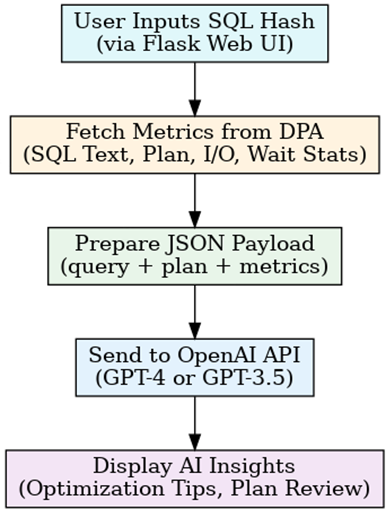

️ The Architecture: How it Works

️ The Architecture: How it Works

High-level architecture of DPA and OpenAI integration.

1. The user starts by entering a SQL Hash into a custom-built Flask web app.

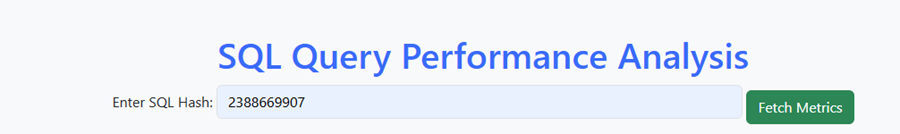

To make the process intuitive, I developed a lightweight web interface using the Flask framework in Python. This web UI accepts a SQL Hash, an identifier used by SolarWinds DPA to track a specific SQL query.

Behind the scenes, the app securely connects to the DPA repository database (MySQL, in my setup). You'll need a valid connection string, including hostname, port, username, and password, along with read permissions to specific DPA tables such as CONST_, CONSW_, conspt_, and CON_STATS_SUM_. These credentials can be managed using environment variables or a secure configuration file.

Once the user submits the SQL Hash, the app pulls all the relevant performance data needed for the analysis.

2 .The backend fetches SQL query performance data from DPA

Using the SQL Hash, the app queries the DPA repository to collect important metrics:

- The raw SQL text

- The execution plan in XML format (assembled from multiple rows if fragmented)

- I/O statistics like logical reads, physical reads, and rows processed

- Wait statistics such as ASYNC_NETWORK_IO, CPU, or MEMORY waits

These details represent a complete snapshot of the query’s runtime behavior.

3. The collected metrics are structured into a JSON payload.

All the fetched information—query text, execution plan, and performance stats—is organized into a structured JSON object. Before building the payload, the raw SQL query is sanitized to remove sensitive values (like literals or business-specific names), ensuring security, privacy, and clean input for the AI.

This step ensures OpenAI receives just enough structure to understand the query pattern, without exposing internal data.

4 .The JSON payload is sent to OpenAI for intelligent analysis.

Once the SQL metrics and execution plan are gathered, the app structures them into a JSON object and sends it to OpenAI’s GPT API (GPT-3.5 or GPT-4, depending on your setup).

But what truly unlocks the AI’s power is the prompt engineering.

How Prompting Works in This Integration

A prompt isn’t just a question; it’s the full context we provide to the AI model so it can respond with relevant, accurate advice. The quality of the insights depends greatly on how well we frame the request.

The crafted prompt includes:

- The raw SQL query (sanitized)

- The execution plan XML

- I/O metrics like logical/physical reads and rows processed

- Wait stats, highlighting time spent on CPU, memory, or network

Then we ask GPT to:

Interpret the execution plan structure

Interpret the execution plan structure Detect potential performance bottlenecks

Detect potential performance bottlenecks Recommend indexes or suggest schema changes

Recommend indexes or suggest schema changes Rewrite the query for better performance

Rewrite the query for better performance Explain key wait stats in plain English

Explain key wait stats in plain English

The result? Context-aware, human-readable advice tailored to your query’s runtime behavior.

Note: The better the prompt, the better the result. That’s why we include just enough technical detail to give GPT the full picture, without overwhelming it.

Note: The better the prompt, the better the result. That’s why we include just enough technical detail to give GPT the full picture, without overwhelming it.

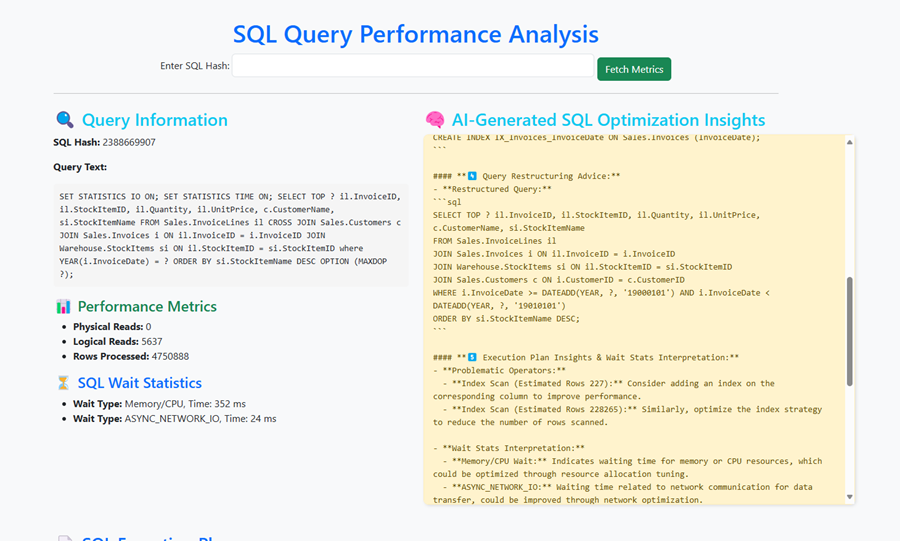

5.The AI-generated insights are displayed alongside DPA metrics.

Finally, the web app presents everything in a clean, two-pane layout. One panel shows the raw DPA metrics for transparency and validation. The other panel features OpenAI’s suggestions, formatted as plain-English explanations and optimization tips.

This separation helps users compare machine-generated advice with what they see from the metrics and make informed decisions.

To integrate OpenAI with SolarWinds DPA and leverage its powerful AI capabilities, follow the steps below to get started with the OpenAI API.

Getting Started

. Environment Setup: What You Need to Get Started

To successfully run this integration between SolarWinds DPA and OpenAI, you’ll need to configure a few essential components:

1. Python Environment

- Make sure Python 3.8+ is installed.

- Install required packages using pip:

2. DPA Repository Access

- Access to the SolarWinds DPA database (e.g., MySQL).

- Credentials (host, port, username, password) with read-only access to the following tables:

- COND (Database identifiers)

- CONST_<id> (SQL text)

- CONSW_<id> (Execution details)

- CONSPS_<id> (Query plan fragments)

- CON_STATS_SUM_<id> (I/O and wait stats)

3. Flask Web App Setup

- Clone or create your Flask web app.

- The app should include:

- Input field for SQL Hash

- Backend logic to connect to DPA, extract metrics, and render insights

- Routes for HTML rendering and optional .sqlplan file download

4. OpenAI API Configuration

Insight Unlocked: Interpreting AI-Powered SQL Tuning Results

For this demonstration, I’m using Microsoft’s WideWorldImporters sample database to showcase the integration of SolarWinds DPA with OpenAI for intelligent query tuning.

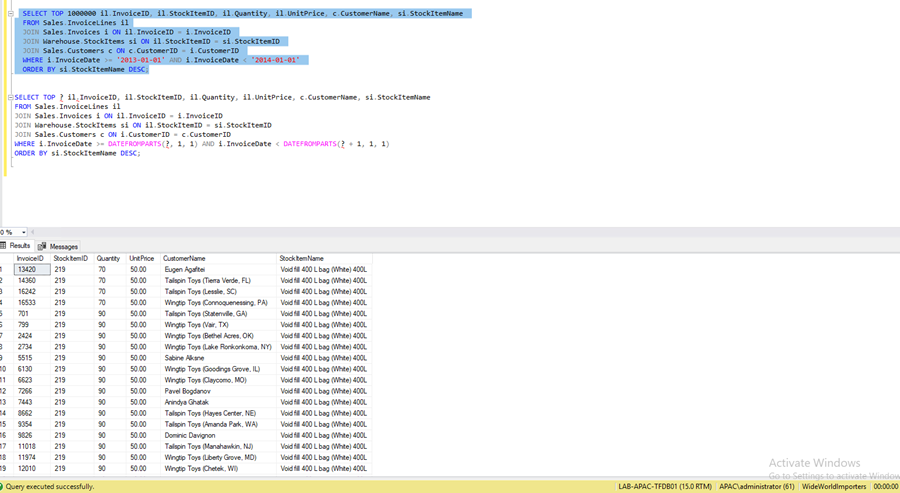

Let’s begin with a sample query that, in its current form, takes approximately 40 seconds to execute:

SELECT TOP 1000000 il.InvoiceID, il.StockItemID, il.Quantity, il.UnitPrice, c.CustomerName, si.StockItemName

FROM Sales.InvoiceLines il

CROSS JOIN Sales.Customers c

JOIN Sales.Invoices i ON il.InvoiceID = i.InvoiceID

JOIN Warehouse.StockItems si ON il.StockItemID = si.StockItemID

where YEAR(i.InvoiceDate) = 2013

ORDER BY si.StockItemName DESC

OPTION (MAXDOP 1);

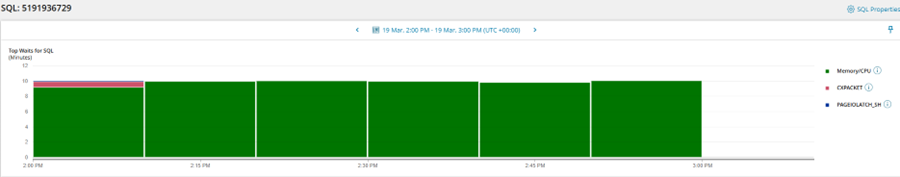

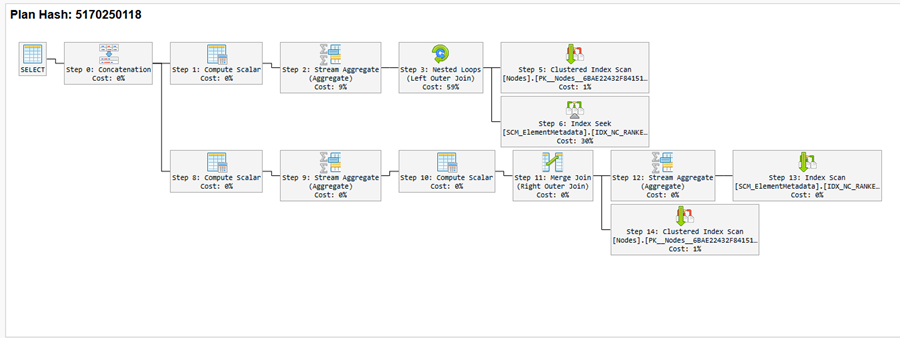

SolarWinds DPA successfully captures all key performance details related to this query—execution plan, I/O statistics, and wait events—providing a rich dataset for analysis.

Once this information is collected, I pass the query text, execution plan, wait statistics, and row processing metrics to OpenAI for further interpretation.

The results? OpenAI adds contextual intelligence to raw metrics, turning them into clear, human-readable insights. It not only identified inefficiencies in the query structure but also recommended an optimized version of the query:

SELECT TOP ? il.InvoiceID, il.StockItemID, il.Quantity, il.UnitPrice, c.CustomerName, si.StockItemName

FROM Sales.InvoiceLines il

JOIN Sales.Invoices i ON il.InvoiceID = i.InvoiceID

JOIN Warehouse.StockItems si ON il.StockItemID = si.StockItemID

JOIN Sales.Customers c ON i.CustomerID = c.CustomerID

WHERE i.InvoiceDate >= DATEFROMPARTS(?, 1, 1)

AND i.InvoiceDate < DATEFROMPARTS(? + 1, 1, 1)

ORDER BY si.StockItemName DESC;

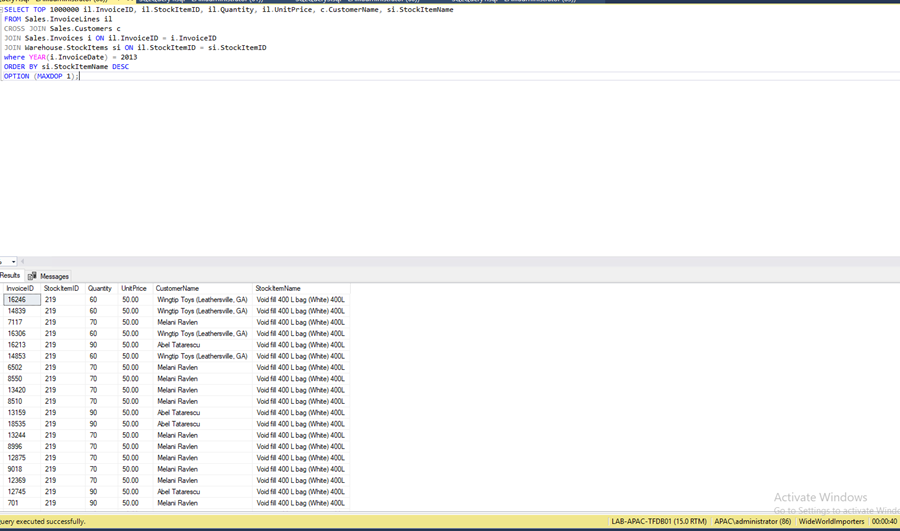

After substituting the parameter placeholders appropriately, here’s the rewritten query that I tested:

SELECT TOP 1000000 il.InvoiceID, il.StockItemID, il.Quantity, il.UnitPrice, c.CustomerName, si.StockItemName

FROM Sales.InvoiceLines il

JOIN Sales.Invoices i ON il.InvoiceID = i.InvoiceID

JOIN Warehouse.StockItems si ON il.StockItemID = si.StockItemID

JOIN Sales.Customers c ON c.CustomerID = i.CustomerID

WHERE i.InvoiceDate >= '2013-01-01' AND i.InvoiceDate < '2014-01-01'

ORDER BY si.StockItemName DESC;

The query gets executed in less than a second.

AI-Identified Issues and Optimization Suggestions

Suggestion 1: CROSS JOIN Without a Join Condition

- The original query used a CROSS JOIN between InvoiceLines and Customers, producing a Cartesian product.

- This drastically increased the row count and execution time.

- Unless intentional, this join pattern is likely an oversight.

Suggestion 2: Non-Sargable Filter Using YEAR()

- Applying the YEAR() function to a column disables index usage, forcing table or index scans.

Fix: Replace with a sargable date range filter:

Fix: Replace with a sargable date range filter:

i.InvoiceDate >= '2013-01-01' AND i.InvoiceDate < '2014-01-01'

Suggestion 3: OPTION (MAXDOP 1)

- This hint disables parallelism, which can significantly reduce performance on large datasets.

- While sometimes necessary, it should be tested carefully.

Fix: The rewritten query removes the OPTION (MAXDOP 1) hint to enable parallel execution where appropriate.

Fix: The rewritten query removes the OPTION (MAXDOP 1) hint to enable parallel execution where appropriate.

Use AI Responsibly

While OpenAI can provide excellent suggestions, its results should always be reviewed and validated. Blindly applying AI recommendations can be risky. LLMs can misunderstand schemas, suggest invalid logic, or overlook constraints. Think of the AI as a junior DBA who needs supervision.

Conclusion

Conclusion

By combining the power of SolarWinds DPA and OpenAI, DBAs can reduce the cognitive overhead of tuning SQL. This hybrid approach enhances productivity, learning, and ultimately, database performance. It’s not about replacing humans, it’s about elevating them.

About the Author

Jameel Mohammed Monsoor is a Database Solutions Engineer with 16+ years of Database experience across finance, healthcare, and cloud platforms. Passionate about observability and performance, he believes AI and automation will define the next-gen DBA.